English | MP4 | AVC 1280×720 | AAC 44 kHz 2ch | 2h 11m | 306 MB

This course helps data scientists and engineers who have built a great ML model in TensorFlow deploy that model to production, either locally or on one of the three major cloud platforms: Azure, AWS, or GCP.

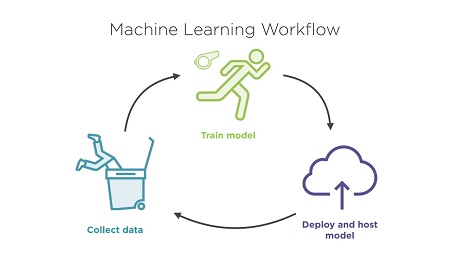

Deploying and hosting your trained TensorFlow model, whether locally or on your cloud platform of choice (Azure, AWS, or GCP), can be challenging. In this course, Deploying TensorFlow Models to AWS, Azure, and the GCP, you will learn how to take your model to production on the platform of your choice. The course starts by focusing on how to save the parameters of a trained model using the SavedModel interface, a universal interface for TensorFlow models. You will then learn how to scale the locally hosted model by packaging all of its dependencies in a Docker container. Next, you will be introduced to Amazon SageMaker, the fully managed ML service offered by Amazon. Finally, you will deploy your model on the Google Cloud Platform using the Cloud ML Engine. By the end of the course, you will be familiar with how a production-ready TensorFlow model is set up, as well as how to build and train your models end to end on your local machine and on the three major cloud platforms. Software required: TensorFlow, Python.

Table of Contents

Course Overview

1 Course Overview

Using TensorFlow Serving

2 Module Overview

3 Prerequisites and Course Overview

4 The Machine Learning Workflow – Local Serving

5 Demo – Exploring the Churn Prediction Dataset

6 Demo – Training and the Experiment Function

7 The Saved Model

8 The TensorFlow Model Server

9 gRPC and Protocol Buffers

10 Demo – Setting up the Azure VM

11 Demo – Installing TensorFlow gRPC Serving APIs and the Model Server

12 Demo – Deploying and Hosting the MNIST Classification Model

13 Demo – Setting up the Churn Model

14 Demo – Training and Saving the Model

15 Demo – Making Predictions from a Saved Model

Containerizing TensorFlow Models Using Docker on Microsoft Azure

16 Module Overview

17 Azure ML IaaS and PaaS Options

18 Containers and VMs

19 Demo – Docker CE Install

20 Demo – Building the Docker Image

21 Demo – Running a Docker Container for Predictions

22 Demo – Registering the Image with Docker Hub

23 Demo – Running Docker Using the Docker Hub Image

24 Demo – Making Predictions from a Saved Model Using a Docker Container

Deploying TensorFlow Models on Amazon AWS

25 Module Overview

26 The Machine Learning Workflow – SageMaker

27 Training the Model

28 Deploying the Model

29 Training and Inference Code Interface

30 Demo – Setting up an S3 Bucket

31 Demo – Setting up a Notebook Instance

32 Demo – Data Preparation

33 Demo – Setting up the TensorFlow Model

34 Demo – Training and Deploying the Model

35 Demo – Models and Endpoints

Deploying TensorFlow Models on the Google Cloud Platform

36 Module Overview

37 Cloud ML Engine vs. SageMaker

38 The Machine Learning Workflow – Cloud ML Engine

39 Training the Model

40 Deploying the Model

41 Demo – Connecting to Datalab

42 Demo – Creating a GCS Bucket

43 Demo – Data Preparation

44 Demo – Setting up Bucket Permissions

45 Demo – Python Package Contents

46 Demo – Local Training and Prediction

47 Demo – Distributed Training and Deployment

48 Demo – Making Predictions Using Cloud ML Endpoints

49 Summary and Further Study
